Open science is gaining momentum, and monitoring its adoption across research activities and scholarly communications is essential for its continued progress. To support the Open Science Monitoring Initiative's (OSMI) work on open science monitoring, OSMI Working Group 3 (WG3) surveyed scholarly content providers (publishers and journals, repositories and archives, and platform operators). The survey sought to understand their motivations for monitoring, their current practices for monitoring open outputs (e.g., OA articles, datasets, software) and open outcomes (e.g., reuse, collaboration, societal engagement), and the needs of those who have not yet started monitoring. While not representative of the sector as a whole, the sample captures practices across OSMI members and collaborators and offers insights into the implementation choices of organizations with different scopes and levels of resourcing.

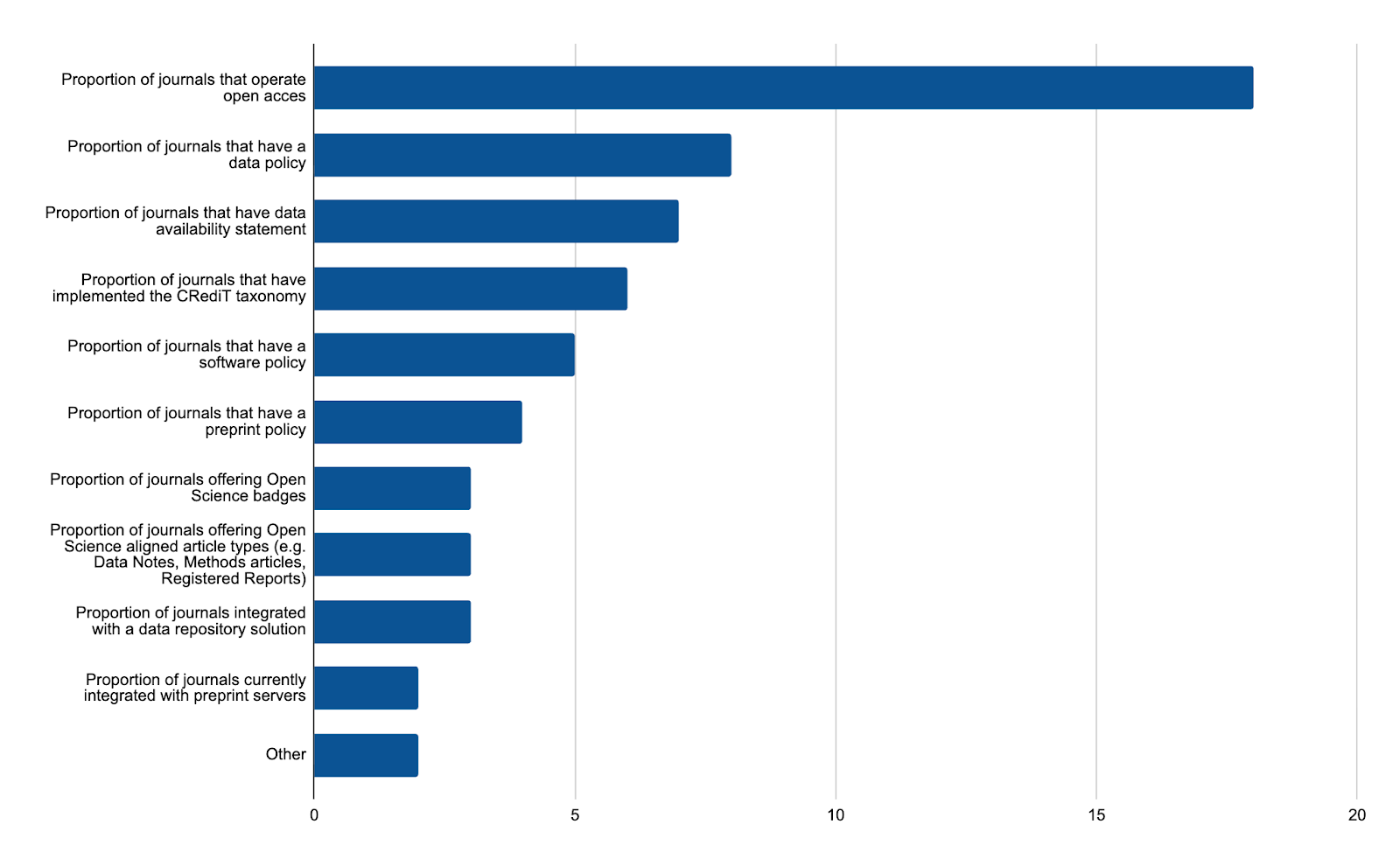

Among survey respondents, the adoption signal is clear: 70.1% report conducting open science monitoring, 23.4% do not, and 6.5% are unsure. Motivations for monitoring are primarily policy-aligned: compliance with open science mandates, reporting to institutions and funders, and gathering evidence to inform policy and portfolio decisions. By contrast, only a minority of content providers reported tracking the return on investment of open science. The measures used for monitoring reflect current policies and infrastructure (Fig. 1). Publishers commonly track adoption across their journal portfolios through measures such as the share of journals operating open access, the presence of data policies, and the prevalence of data availability statements. Indicators that require integration with other platforms (such as links between journals and preprint servers or repositories) are used less often.

At the output level, indicators are widespread yet methodologically heterogeneous. Organizations count and segment open access articles, datasets, and software; capture usage indicators such as citations, downloads, and views; and estimate the share of articles with associated data or code. Outcome monitoring is the exception rather than the rule. Where present, it includes analyses of the use or reach of outputs enabled by openness (for example, correlating openness with citations), collaboration patterns, citizen science and public engagement activities, and diversity in participating communities. The results point to different levels of maturity in monitoring practices: indicators for open objects are abundant, while approaches for collecting evidence of downstream effects on science or society remain limited.

Methodological variation creates challenges for comparability. A single indicator such as “article with open data” is defined in different ways across providers: some accept any data presence, including supplementary files; others require repository-hosted datasets linked via persistent identifiers (PIDs). The methods used to detect open objects associated with articles also differ, ranging from author-declared statements to metadata sources and automated full-text mining. These choices show which areas of open science monitoring are more readily enabled by existing infrastructure, but they also point to opportunities for greater alignment to support cross-platform benchmarking, longitudinal analysis, and policy evaluation.
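
To make the comparability problem concrete, here is a minimal Python sketch, assuming a hypothetical article record with two fields: whether any supplementary data file is attached, and which PIDs of repository-hosted datasets are linked. The record layout, the function names, and the four articles are all made up for illustration. Applying the two definitions of “article with open data” described above to the same articles yields different shares.

```python
from dataclasses import dataclass


@dataclass
class Article:
    # Hypothetical record; real providers store these signals differently.
    article_id: str
    has_supplementary_data: bool  # any data file attached to the article
    dataset_pids: tuple           # PIDs of linked repository-hosted datasets


def has_open_data_lenient(a: Article) -> bool:
    """Lenient definition: any data presence counts, including supplementary files."""
    return a.has_supplementary_data or len(a.dataset_pids) > 0


def has_open_data_strict(a: Article) -> bool:
    """Strict definition: only repository-hosted datasets linked via a PID count."""
    return len(a.dataset_pids) > 0


def share(articles, indicator) -> float:
    """Fraction of articles for which the indicator holds."""
    return sum(indicator(a) for a in articles) / len(articles)


# Made-up example articles with placeholder identifiers.
articles = [
    Article("article-1", True, ()),
    Article("article-2", False, ("pid:dataset-1",)),
    Article("article-3", True, ("pid:dataset-2",)),
    Article("article-4", False, ()),
]

print(f"lenient: {share(articles, has_open_data_lenient):.0%}")  # lenient: 75%
print(f"strict:  {share(articles, has_open_data_strict):.0%}")   # strict:  50%
```

Neither function reflects any particular provider's implementation. Real pipelines also differ in how the underlying signals are detected (statements, metadata, or full-text mining), which adds a further source of divergence on top of the definition itself.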

The survey also offered useful insights from organizations that do not yet monitor open science. Their needs follow a consistent hierarchy. Funding is most frequently rated as “very important” for implementing open science monitoring. Capacity needs are immediate: staff training, beginner-oriented guidance, and additional personnel. Standards and “data plumbing” follow closely: consistent indicator frameworks, open and PID-rich metadata, and normalization and retrieval protocols that make monitoring feasible to implement and maintain. OSMI invites you to read the extended post on the Upstream blog, where these findings are complemented with further observations and figures.

OSMI WG3 will use these survey findings to guide its next steps: documenting the benefits of open science monitoring for scholarly content providers, offering practical guidance on implementation, and developing recommendations on which indicators to adopt.

Eleonora Colangelo (https://orcid.org/0009-0006-5741-1590) & Iratxe Puebla (https://orcid.org/0000-0003-1258-0746)